Over the course of 30 years as an audio engineer, I’ve recorded thousands of voice sessions for commercials, TV shows, movie trailers, video games… you name it. It was always my favorite part of the job because it was social, it was creative, it was collaborative and it was FUN! You get to know and like the regular talent who frequent the studio, and humor, often inappropriate, is an ever-present part of the gig.

Many times, when a voice actor had to pop back in to the studio the next day to correct an error or to accommodate a fickle copywriter, he or she would quip, “You should just record me saying every word in the dictionary so I don’t have to keep coming back!” or “Someday a computer will be able to imitate my voice and then won’t I be screwed!”

HA, HA, Ha, Ha, ha, heh, h….. (cough) ummmmm

Siri, Alexa, Cortana … Text to Speech (TTS) has come a long way since Stephen Hawkings first found his electronic voice. Since then, advances in speech generation have generally centered around something called concatenative TTS. Basically what happens is this: A real human being (voice actor Susan Bennett in the case of Siri) records hours and hours of carefully concocted nonsense. The purpose is to generate a library of phonemes – fragments of speech – which can be recombined on the fly by very clever software to create a reasonable facsimile of human speech. The better the software, the better the results.

However, the result will always sound like the person who recorded the little bits. And of course, things like emotion, proper inflection, and even breath and mouth noise are completely lacking. That’s why, as cool as this little trick is, I’m not fooled into thinking that Siri is a real person who gets her feelings hurt when I curse her for directing me to Sugar’s Strip Club when I actually wanted to go to Sugar’s BBQ. (Either way, I’m going to end up smelling like something I shouldn’t have gone near.)

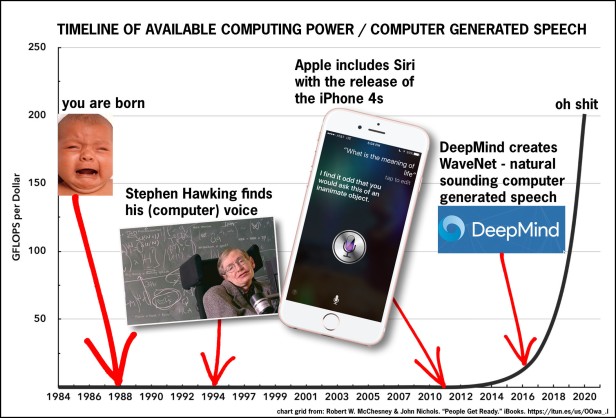

All in all, it’s impressive when it works, and it works pretty well. It’s the state of the art, given the current limitations of computing power. However, Moore’s Law is still alive and kicking, and we’re moving swiftly toward the fun end of the ‘exponential curve’. (see super scientific chart below) The deployment of neural networks is rapidly becoming less expensive as computing power explodes. Computationally expensive processes that require these neural nets, such as machine learning, become more feasible every day and they are all around us. So what does this mean for computer generated speech? Well, it would seem inevitable that audio be used to train such a system to recognize and then generate the components of human speech in much the same way that Google has trained their system to recognize faces in photographs. (Or cats, or cars and so on.)

In theory, one could then generate speech from the ground up – at the waveform level. In other words, no more Susan Bennett recording pages of gobbledygook and having computers reassembling speech fragments on the fly. (With the result being a heavily medicated sounding Susan Bennett.) Instead, imagine choosing a voice to speak your written text and ‘dialing in’ inflection, emotion, etc. Even tweaking various qualities of the voice itself. And then imagine never paying residuals, or a second session fee plus studio time for the inevitable revision;

“Gimme Donald Sutherland with just a tad of Edward Norton. No, that’s too much Norton… better. Now, let me make some changes on this script and then I’d like to hear it in a Susan Sarandon-esq kind of thing, but higher pitched.”

Every producers wet-dream. Every voice talent’s worst nightmare.

Enter WaveNet

DeepMind is perhaps THE leading applied research organization in the world of Artificial Intelligence. Founded by a couple of guys with resumes that cause one to speculate they may be aliens from the future, DeepMind was acquired by Google in 2014 and have been publishing academic papers and pushing the technology envelope ever since. Their mission statement:

“Solve intelligence. Use it to make the world a better place.”

Their latest research breakthrough is called WaveNet, and guess what it involves? You’re way ahead of me; neural-net-based realistic sounding speech that’s generated at the waveform level! Here’s what good old fashioned concatenative TTS sounds like:

And here’s what WaveNet sounds like:

Yeah, I know. Not quite ready for prime-time, but it certainly is clear where this could go, given ever larger training sets, more sophisticated modeling and the application of greater computational power. Perhaps in a few years, voice talent will spend their time and energy training a WaveNet model, and licensing it instead of hustling for gigs. Perhaps the models will become sophisticated enough for things like projection, pace, inflection and emotion to be controlled by on-screen sliders, allowing us to tailor a voice performance to our hearts content.

I know it sounds far fetched.

However, I imagine myself 15 years ago telling the owner of a leading video post production house that by 2016 I’ll be able to put together a video editing, visual effects and color correction suite that operates at four times the resolution of the HD equipment that they’d just sunk a million bucks into, and that I could do it for about $15,000. I’d have probably been laughed out of the room. I’ll never know, since few of those video post houses in my market exist anymore. Not that the talented people at those places are no longer making a living; they’re just doing it in a significantly different way. The smart ones have adapted.

Just like some visionary voice actors will probably do.

With very sweaty palms, I’ve been following the development of WaveNet too. It’ll be interesting to hear it try to tackle something long-form. My bets are on a bad version of Auto Announce.

I’ve said for years that the one thing artificially produced voice cannot simulate is emotional subtext. We humans can tell what someone means simply by how they say it—even with nonsense text. And from being on this side of the glass, directing people for 30 years, we know how subtle this can be. Computers (for the time being) are emotion-incapable, so whatever fakery it takes to simulate emotion would have to be human-driven (like the sliders you mention.)

I think one thing we voiceover people have to be wary of is the sampling of our voices for this. Case in point—my studio donated a lot of open summer studio space to a process called Vocal ID which gives speaking-impaired people a voice that is actually theirs.

It’s a concatenative process involving voice artists reading pages of nonsense phonemes. But here’s the interesting part: after we send the files, the speaking-impaired person makes utterances in their own voice. Vocal ID then takes the formant of their utterances and superimposes it on the concatenated phonemes. Result? Stephen Hawking’s speaking machine, but with the person’s own voice.

Perhaps we should all consider copyrighting/patenting/protecting our personal voice prints?

LikeLike

I guess it’s a good thing that I only plan to keep at this voice actor thing another 7 or 8 years.

LikeLike

I think the Hoyt-Tone Voice-o-Matic (TM) is gonna be HUGE! Passive income opportunity!

LikeLike

I love the visual rhetoric of this post. Great flow. Great use of negative space.

Also, it’s just super interesting in a kind of horrifying way.

LikeLike